If you think your problem is your CI tool, think again.

Chances are it’s just a knee-jerk reaction. You probably have a problem with your pipelines.

In this blog post, I will be focusing on on-prem CI tools (where you host both the server and the agents); the discussion is slightly different for Cloud CIs (e.g. TravisCI, CodeShip, CircleCI), and I will leave that to another post.

As it’s 2018, chances are you are all using some CI tool (e.g. Jenkins, Bamboo, TeamCity, GoCD, Concourse CI, Buildkite, Spinnaker) to run your continuous integration tests (unit, integration, end-to-end, and so on).

You are probably also familiar with the concept of deployment pipelines.

At an abstract level, a deployment pipeline is an automated manifestation of your process for getting software from version control into the hands of your users. Every change to your software goes through a complex process on its way to being released. That process involves building the software, followed by the progress of these builds through multiple stages of testing and deployment. This, in turn, requires collaboration between many individuals, and perhaps several teams. The deployment pipeline models this process, and its incarnation in a continuous integration and release management tool is what allows you to see and control the progress of each change as it moves from version control through various sets of tests and deployments to release to users.

Continuous Delivery, THE book

Note that your pipeline should be represented in a CI tool. That doesn’t mean your CI tool should define your pipeline.

Your CI tool is dumb

Some people like to think of the CI tool as the deployment pipeline orchestrator: the tool that holds the definitions of all pipelines and automatically triggers a new pipeline chain (a new release candidate) per commit. It stores information about each release candidate (based on green/red results) and about what has been deployed, when, and where.

While that definition is true, I think it misleads people into thinking of the CI tool as a magic black box: a sausage factory for production artefacts. In my opinion, that’s counterproductive.

I like to say that CI tools are distributed arbitrary remote code executors.

Distributed because the server dispatches jobs, and agents/slaves pick up the jobs, execute them locally, and submit results (and sometimes some result files) back to the server.

Arbitrary because the agents will execute exactly the commands defined by the user.

So effectively your CI tool is just a job scheduler. It will run the commands you define, on the machines you maintain. That means that a CI tool cannot protect you from yourself.

Oh, small secret: those nice GUI-oriented tasks on your CI web interface are most likely just wrappers for command line tools installed on the agents.
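To make the "distributed arbitrary remote code executor" idea concrete, here is a toy sketch in Python. It is not how any real CI server is implemented; the `server` and `agent` functions, the job names and the green/red report dictionary are all hypothetical. The server puts jobs (plain command lines) on a queue, agents pick them up, run them verbatim, and report the exit code back.

```python
import queue
import subprocess
import sys
import threading


def agent(name, jobs, results):
    """Hypothetical agent: pull a job, run its command verbatim, report back."""
    while True:
        job = jobs.get()
        if job is None:  # sentinel from the server: no more work
            return
        job_id, command = job
        # "Arbitrary": the agent runs exactly the command it was given.
        proc = subprocess.run(command, capture_output=True, text=True)
        results.put((job_id, name, proc.returncode, proc.stdout.strip()))


def server(job_defs, n_agents=2):
    """Hypothetical server: dispatch jobs to agents and collect green/red results."""
    jobs, results = queue.Queue(), queue.Queue()
    for job in job_defs.items():
        jobs.put(job)
    for _ in range(n_agents):
        jobs.put(None)  # one stop signal per agent, queued after all real jobs

    agents = [
        threading.Thread(target=agent, args=(f"agent-{i}", jobs, results))
        for i in range(n_agents)
    ]
    for a in agents:
        a.start()
    for a in agents:
        a.join()

    report = {}
    while not results.empty():
        job_id, agent_name, code, out = results.get()
        report[job_id] = {
            "agent": agent_name,
            "status": "green" if code == 0 else "red",
            "output": out,
        }
    return report


if __name__ == "__main__":
    # Each "job" is just a command line; sys.executable keeps the demo portable.
    pipeline = {
        "unit-tests": [sys.executable, "-c", "print('ok')"],
        "lint": [sys.executable, "-c", "import sys; sys.exit(1)"],
    }
    for job_id, result in server(pipeline).items():
        print(job_id, result["status"], "on", result["agent"])
```

Nothing in `agent` knows or cares whether the command is a test, a deploy or a cleanup script. If the job goes red because of broken credentials or files left behind on the machine, the scheduler just reports the exit code it was given.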

Everything is CI

But your CI is a lot more than a CI tool; you actually have a whole ecosystem behind it. For example:

- CI server
- CI agents/slaves
  - Java
  - Maven
  - npm
  - Node
  - Docker
  - …
- Build dependencies
  - Git repository
  - Maven repository
  - Docker registry
  - npm repository
- CI environments (Dev, QA, and so on)

It’s not Jenkins’ fault if the agents’ authentication to Nexus is failing; it’s not TeamCity’s fault if your agents no longer have connectivity to the DEV environment. You can’t blame your CI tool if you didn’t provide enough agents and builds are queuing. It’s not Bamboo’s fault if some other build left files behind and broke your build; the agent ran exactly the commands it was told to run.

I can tell you this from personal experience: of all the components in CI-land, the CI server is the one least likely to give us trouble. Keeping that service running is the easy part.

Why are there so many CI tools?

While all CI tools are job schedulers, they are still different job schedulers.

They vary in how pipelines are created (only via code, via a web interface, both, or some other Frankenstein monster), how results are visualised, how easy it is to create new agents on a given infrastructure provider, and what tricks they allow for different processes.

Depending on your CI tool, it might require more code and effort to get to the desired result.

Jenkins is the CI grandpa: it was king when there was only CI, not really CD. It doesn’t really have the concept of a pipeline. While you can probably do everything you want, it will require a lot of plugins and a lot of workarounds, with varying degrees of brokenness. It takes a bit more code and energy to get it to an acceptable state as a pipeline tool.

The second wave of CIs (e.g. TeamCity, Bamboo, GoCD) focused a lot more on features like maintainability, CD, pipelines, value stream maps, branch builds and other workflows. Build as code wasn’t a big thing.

The third wave of CIs (e.g. Spinnaker, Buildkite, Concourse CI) leveraged build as code and cloud providers.

There’s no perfect CI. They all have different features.

Should I change my CI tool?

The first answer is no. It’s very rare to see a CI tool change that actually delivers what the business case suggested it would.

Changing the CI tool tends to be a big waste of money for the company, and developers are not any happier with their new CI tool either. Costs and effort are always heavily underestimated. Your pipelines are a lot more complicated and interact with a lot more subsystems than people realise. There’s a surprising amount of glue code that relies on the specifics of your CI tool.

Assuming you have a working CI tool, with all your proper pipelines in place, you’ve already spent some time customising it. A new CI tool means more servers, more ops, more automation, more security assessments, migration, more glue code, learning the new tool and adapting every single pipeline you have to new workarounds, authentication, permissions, training, and a new learning curve. Those all cost money. Whatever you estimate, at least double it.

A company doesn’t buy a CI tool out of generosity; it buys a piece of software that will actually increase developers’ and ops’ productivity. The CI tool is not a business deliverable; it’s a tool to help developers and improve the quality and speed of the products being delivered.

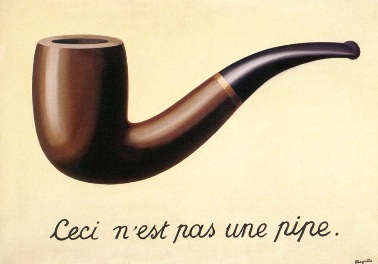

Ceci n’est pas une pipe

“Oh yeah, Bamboo, the tool everyone loves to hate.”

In my opinion, if you use any CI tool enough, you will hate it. If you don’t hate it, maybe you are just not using it enough. Maybe the grass is just greener on the other side. Changing CI tools is a lot like hitting your foot with a hammer to forget a headache.

I keep asking myself why some developers are always so certain that switching to some other specific CI tool is the solution they need.

Is it because CI is such a central tool? Is it because management wouldn’t block the change, for once? Is it because all the other CI problems manifest themselves on the CI tool screen, causing some sort of collective exposure trauma? Is it because people simply don’t understand what was broken, and blame the CI tool? Maybe people want to believe that this new relationship will be totally different, and that of course they won’t accidentally make exactly the same mistakes with a new partner?

I’ve seen people migrating to (and from) GoCD because they wanted every single artefact in the company to be part of the same pipeline/stream map. Just because GoCD allows you to do that doesn’t mean you should; even the Continuous Delivery book tells you it’s a very bad idea. And being on GoCD doesn’t force you to do it!

If your life is hell because you tangled all your dependencies, fix your pipelines! Don’t blame GoCD for your bad pipelines.

Some people went to Jenkins because it’s free. Except it’s not. Maintaining it and keeping up the ongoing customisations is far more expensive for the company. The cost of a CI tool licence is peanuts compared to the cost of actually maintaining it. (While we’re at it: if you use GoCD and have more than 15 people in your company, pay the licence fee so you can use a proper database.)

Are you actually blaming Bamboo because you decided not to use its plans/pipelines the way the tool was designed, and used standalone jobs like in Jenkins instead? Yeah, nah.

You didn’t create a pipeline in your CI tool and everything is a mess? How’s that caused by your CI tool choice?

What’s your problem?

Every CI tool needs customisations (plugins, configurations) to be in a good state. A CI tool is not something you just grab off the shelf and use. It’s a pretty flexible and demanding part of the development workflow, and it needs attention.

What’s the underlying problem you are trying to address when you want to change the CI tool? Ask that carefully. Chances are the problem is something completely different, just masked as CI tool hate. You should fix the problem you have, not the problem you want to believe you have.

You can critique tools for incentivising the wrong behaviours. You can also critique tools for being opinionated (with opinions you disagree with).

I do believe there are legitimate reasons to migrate CI tools, but I’ve never seen them in real life. Even Jenkins can be a good CI tool, and sometimes it has had enough love and care that migrating isn’t worth it.

It’s not a matter of which CI tool is best, but of whether there’s a real benefit in migrating: benefits for the company and for the teams.

Comments

Timur Batyrshin

25/09/2018

I know a couple of reasons for migration, but these are not the ones you’d usually think of:

- lack of stock plugins/customisations in the current build tool
- migration from pipeline as “clicking in UI” to pipeline as code, to a tool that supports that

The first reason is usually a consequence of a bad decision during a previous CI migration :-D The second reason is becoming less relevant with the releases of the Jenkins Groovy DSL and the TeamCity Kotlin DSL.

Cintia

01/10/2018

Moving from/to on-prem to cloud and build as code are valid reasons.

Most CIs now support build-as-code (I think the exception is Bamboo; they created an SDK for some reason).

Marek

24/10/2018

SDK? I guess you are talking about Bamboo Specs, in particular the Java flavour?

https://confluence.atlassian.com/bamboo/bamboo-specs-894743906.html

Yes. There is not SOME reason, but MULTIPLE reasons why robust configuration-as-code requires a high-level programming language. Configuration IS code, no matter whether it is written in SHELL, JSON, YAML, Groovy, Java or anything else. And if it is code, we should maintain it as code. Think about things like:

See more reasons here: https://confluence.atlassian.com/bamboo/bamboo-java-specs-941616821.html

I highly recommend, first, getting familiar with it and, second, trying it out in practice. You will very quickly see the superiority of a high-level language over, e.g., YAML if your build configuration has 10 or more jobs.

I recommend starting with:

and tutorials:

- https://confluence.atlassian.com/bamboo/tutorial-create-a-simple-plan-with-bamboo-java-specs-894743911.html
- https://confluence.atlassian.com/bamboo/tutorial-bamboo-java-specs-stored-in-bitbucket-server-938641946.html

PS: Java is just a language. You can write Bamboo Specs in Groovy, Kotlin, Scala or any other programming language for JVM. It’s up to you. And if you really really want to stick with YAML (why?), there is a (simplified) version of Bamboo Specs for YAML.

Cintia

25/10/2018

Hi Marek,

Well. No, I don’t believe configuration is code. I don’t even think a build is configuration; it’s rather state. But it turns out I’ve already written about it. https://cintia.me/blog/post/build-as-code/

I’m not sure why you had the impression I’m not using Bamboo specs; I do have probably more than 20 builds using Bamboo specs, I’ve been personally attempting to get the rest of the company to adopt it, and I must say, it has been a pretty bad experience.

I could get half of the company to do bits and pieces in CloudFormation, even Puppet, and monitoring. But Bamboo Specs has such a steep learning curve for non-JVM developers that it’s hard to even grab people’s attention.

Non-devs (manual QA, Ops) or even juniors coming from other languages cannot even work out where to start. We now even have a common library to try to minimise the pain, which feels to me like a massive failure. I’m not even convinced it’s any better than before.

My previous company decided to drop Bamboo because of that; Node and Python developers couldn’t imagine they’d need to install Eclipse and Maven to change a build configuration. I can’t blame them for that, although I still believe it was just the cherry on top of the cake.

I never needed modules for my plans. I needed macros (or templates), that’s all. I never wanted a full-blown SDK. I can do Ansible, I can do Terraform, and I never needed code completion or any fancy IDE.

Every single time I present Bamboo Specs to a colleague, I get side-eye. I try to say that it’s hard at the beginning and that it will eventually pay off. I say that because I want to believe it, but it’s a lie. I still hate every single time I need to change a Bamboo build.

I know I’m not the only one: https://jira.atlassian.com/browse/BAM-15087

Bamboo has become a joke at DevOps meetups. It’s not really what I wanted to see.

And Bamboo Specs YAML is broken. So many things are missing there that it’s almost useless.

Daniel Martins

24/09/2018

Great writeup!

We changed CI tools once in my current company, about two years ago, from CircleCI to an “on-prem” Jenkins setup. We did it to get more control over the caching mechanisms (Docker, npm, etc., things that were difficult or plain impossible to do in CircleCI at the time) and improve build times, and we did end up with drastically faster pipelines.

But as you said, there is no such thing as a free lunch: managing a Jenkins cluster to ensure it is up and running when people need it is hard work, but in the end, I still see this migration as a positive endeavor.
